Insights for leaders operating under pressure.

Our Thoughts

Operator-led thinking on systems, intelligence, and execution, designed to help leaders move with clarity when the stakes are real.

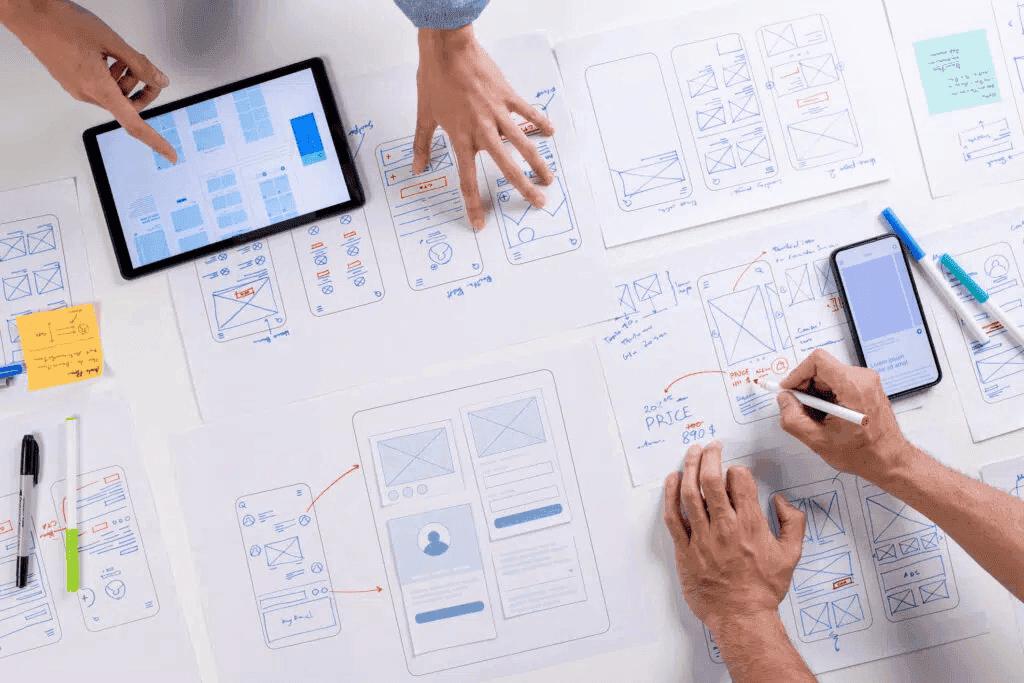

Every release feels like a gamble when quality signals are fragmented. In this article, Sparq Quality Engineering Lead Jarius Hayes explains what changes when quality operates as a connected system, and what engineering leaders at operationally complex organizations are doing to make that shift measurable.

"The era of static technology is over. The only sustainable advantage is the ability to adapt."

Derek Perry, "The AdaptiveOps Shift"

Practice Leader, Solutions Consulting

For a deeper dive, read