What is Data Modeling?

Data modeling is the process of analyzing, defining and restructuring all the different data types your business collects and produces, as well as the relationships between those pieces of data. Using a range of tools and techniques, data modeling helps an organization understand its data. The resulting models provide visual representations of data as it is captured, stored and used across your business. As your business determines how and when data is used, the data modeling process becomes an exercise in understanding and clarifying your data requirements.
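To make the definition concrete, here is a minimal sketch of a tiny data model expressed as a SQLite schema through Python's built-in `sqlite3` module. The `customers` and `orders` entities, their columns and the allowed status values are hypothetical. The point is that the model records both the data types and the relationship between them (each order must reference an existing customer), and the database rejects data that breaks those rules.

```python
import sqlite3

# Hypothetical two-entity model: each order belongs to exactly one existing customer.
SCHEMA = """
CREATE TABLE customers (
    customer_id INTEGER PRIMARY KEY,
    name        TEXT NOT NULL,
    email       TEXT UNIQUE
);
CREATE TABLE orders (
    order_id    INTEGER PRIMARY KEY,
    customer_id INTEGER NOT NULL REFERENCES customers (customer_id),
    amount      REAL NOT NULL CHECK (amount >= 0),
    status      TEXT NOT NULL CHECK (status IN ('placed', 'shipped', 'returned'))
);
"""


def build_model() -> sqlite3.Connection:
    """Create the model in a throwaway in-memory SQLite database."""
    conn = sqlite3.connect(":memory:")
    conn.execute("PRAGMA foreign_keys = ON")  # SQLite enforces foreign keys only when enabled
    conn.executescript(SCHEMA)
    return conn


if __name__ == "__main__":
    conn = build_model()
    conn.execute("INSERT INTO customers VALUES (1, 'Ada', 'ada@example.com')")
    conn.execute("INSERT INTO orders VALUES (10, 1, 25.0, 'placed')")
    try:
        # Customer 99 does not exist, so this insert violates the relationship.
        conn.execute("INSERT INTO orders VALUES (11, 99, 5.0, 'placed')")
    except sqlite3.IntegrityError as exc:
        print("rejected:", exc)
```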

The Benefits of Data Modeling

By modeling your data, you can document what types of data you have, how you use it, and the data management requirements surrounding its usage, protection, and governance. The benefits of data modeling include:

- Creating a framework for collaboration between your data teams.

- Identifying opportunities for enhancing business processes by specifying data needs and applications.

- Optimizing IT and process investments through effective planning.

- Minimizing errors (including those introduced by redundant data entry) while enhancing data integrity.

- Improving the speed and efficiency of data retrieval and analytics by planning for capacity and expansion.

- Establishing and monitoring key performance indicators customized to your business goals.

Different Types of Testing

- Unit testing: This entails testing each component of the data model in isolation to verify that it behaves as expected.

- Integration testing: This involves testing how different components of the data model interact with one another.

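- Data validation testing: This involves testing that the data stored in the data model satisfies specific criteria, such as key columns never being null, identifiers being unique, or values falling within an allowed set.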

- Performance testing: This entails testing the data model’s performance under various conditions, such as heavy loads or with large datasets.

- Security testing: This involves testing the data model’s security, including ensuring the protection of sensitive data and limiting user access to authorized data.
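As an illustration of the first item, unit testing, here is a minimal Python sketch that checks one model component in isolation: a hypothetical `customer_totals` transformation that sums order amounts per customer while excluding returns. It runs the SQL against a small, fixed input in an in-memory SQLite database and compares the result with the expected output. The table, columns and business rule are assumptions made for the example; the test function is named so that a runner such as pytest could collect it, but it also runs as a plain script.

```python
import sqlite3

# Hypothetical model component: total spend per customer, excluding returned orders.
CUSTOMER_TOTALS_SQL = """
SELECT customer_id, SUM(amount) AS total_amount
FROM orders
WHERE status <> 'returned'
GROUP BY customer_id
ORDER BY customer_id
"""


def run_customer_totals(rows):
    """Run the transformation against a small, fixed set of input rows."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE orders (order_id INTEGER, customer_id INTEGER, amount REAL, status TEXT)"
    )
    conn.executemany("INSERT INTO orders VALUES (?, ?, ?, ?)", rows)
    result = conn.execute(CUSTOMER_TOTALS_SQL).fetchall()
    conn.close()
    return result


def test_customer_totals_excludes_returns():
    rows = [
        (1, 1, 20.0, "placed"),
        (2, 1, 5.0, "shipped"),
        (3, 1, 100.0, "returned"),  # must not count toward customer 1's total
        (4, 2, 7.5, "placed"),
    ]
    assert run_customer_totals(rows) == [(1, 25.0), (2, 7.5)]


if __name__ == "__main__":
    test_customer_totals_excludes_returns()
    print("unit test passed")
```

Because the input is fixed and tiny, a failure points directly at the transformation logic rather than at upstream data.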

Why Testing Our Models is Important

Testing in data modeling is crucial for several reasons:

- Business logic validation: Testing can help verify whether the data model accurately reflects the business requirements and logic. This ensures that data is structured appropriately to meet the organization’s needs.

- Early error identification: Testing can detect errors or issues in the model design before it is implemented in production. It is easier and more cost-effective to correct these problems in the early stages of development.

- Ensuring data integrity: Testing can ensure that data modeling does not lead to inconsistencies or redundancies that could affect data integrity. This is especially important in systems where data accuracy and consistency are critical.

- Performance optimization: By testing the data model, it is possible to identify areas where performance improvements can be made, such as query optimization, proper indexing, and normalization or denormalization as needed.

- Change validation: When changes are made to the data model, testing can ensure that these changes do not introduce unexpected errors and that the system continues to function as intended.

- Compliance with requirements: Testing can help ensure that the data model meets the regulatory and compliance requirements that apply to the industry or business.
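Performance checks like the indexing point above can also be automated. The sketch below assumes SQLite as a stand-in database: it asks the engine for its query plan (`EXPLAIN QUERY PLAN`) and checks whether a hypothetical lookup on `orders.customer_id` uses the hypothetical index `idx_orders_customer`. Other engines expose plans differently, so treat this as an illustration of the idea rather than a portable test.

```python
import sqlite3


def query_plan(conn, sql, params=()):
    """Return the human-readable detail lines of SQLite's EXPLAIN QUERY PLAN."""
    rows = conn.execute("EXPLAIN QUERY PLAN " + sql, params).fetchall()
    return [row[-1] for row in rows]  # the last column holds the detail text


def uses_index(conn, sql, index_name, params=()):
    """True if any step of the plan mentions the given index."""
    return any(index_name in detail for detail in query_plan(conn, sql, params))


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE orders (order_id INTEGER PRIMARY KEY, customer_id INTEGER, amount REAL)"
    )
    lookup = "SELECT * FROM orders WHERE customer_id = ?"
    print(uses_index(conn, lookup, "idx_orders_customer", (1,)))  # False: no index yet, so a full scan
    conn.execute("CREATE INDEX idx_orders_customer ON orders (customer_id)")
    print(uses_index(conn, lookup, "idx_orders_customer", (1,)))  # True: the planner searches the index
```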

In summary, testing is a “must” in data modeling.

Nothing is Better Than an Example

An example can often illuminate a concept more effectively than any explanation. Let’s look at an example in dbt (a tool that we use and love at Sparq 😀).

What is dbt?

dbt, or data build tool, is a development framework for data transformation that combines modular SQL with software engineering best practices. It lets data analysts take on data engineering tasks, transforming data already in the warehouse using simple SELECT statements. With dbt, developers can define entire transformation processes in code, write custom business logic in SQL, automate data quality testing, deploy code, and deliver trusted data alongside comprehensive documentation. Because anyone familiar with SQL can use dbt to build high-quality data pipelines, it lowers the barrier to entry that previously limited who could be staffed on this work with traditional technologies.
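To illustrate the "transform with SELECT statements" idea, the Python sketch below wraps a SELECT in `CREATE VIEW ... AS` or `CREATE TABLE ... AS`, loosely mimicking dbt's view and table materializations. In dbt itself, a SQL model is a `.sql` file containing a SELECT, dependencies between models are declared with `ref()`, and dbt runs the generated SQL in your warehouse. Here the `materialize` and `build_sample` helpers, the SQLite database and all table names are hypothetical stand-ins.

```python
import sqlite3


def materialize(conn, model_name, select_sql, kind="view"):
    """Persist a SELECT as a view or table, rebuilding it if it already exists."""
    if kind not in ("view", "table"):
        raise ValueError(f"unsupported materialization: {kind!r}")
    ddl = kind.upper()
    # model_name is interpolated directly, so it must come from trusted code.
    conn.execute(f'DROP {ddl} IF EXISTS "{model_name}"')
    conn.execute(f'CREATE {ddl} "{model_name}" AS {select_sql}')


def build_sample(conn):
    """Load hypothetical raw data, then build two models on top of it."""
    conn.execute("CREATE TABLE raw_orders (id INTEGER, customer_id INTEGER, amount_cents INTEGER)")
    conn.executemany(
        "INSERT INTO raw_orders VALUES (?, ?, ?)",
        [(1, 1, 2500), (2, 1, 500), (3, 2, 750)],
    )
    # Staging model: rename columns and convert cents to currency units.
    materialize(
        conn,
        "stg_orders",
        "SELECT id AS order_id, customer_id, amount_cents / 100.0 AS amount FROM raw_orders",
    )
    # Downstream model built on the staging model (dbt would declare this dependency with ref()).
    materialize(
        conn,
        "customer_totals",
        "SELECT customer_id, SUM(amount) AS total_amount FROM stg_orders GROUP BY customer_id",
        kind="table",
    )


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    build_sample(conn)
    print(conn.execute("SELECT * FROM customer_totals ORDER BY customer_id").fetchall())
    # [(1, 30.0), (2, 7.5)]
```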

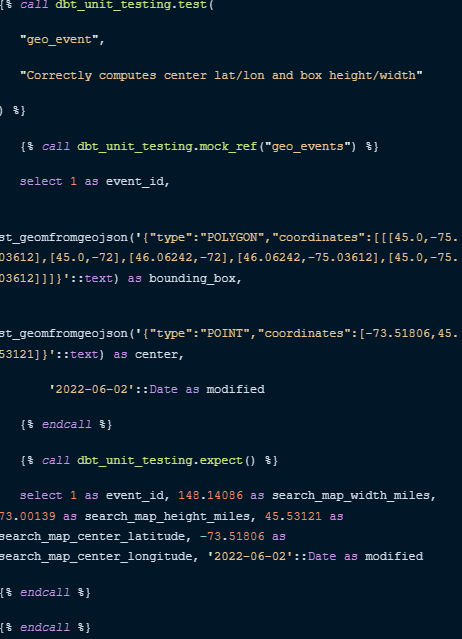

A testing example in dbt:

As you can see, generating tests with dbt is straightforward, which is precisely why it has become indispensable to us. It streamlines the whole process, saves us time and effort, and lets us efficiently check properties such as uniqueness, missing values, and relationships between models, ensuring the quality of our work.
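dbt ships with four built-in generic tests, `unique`, `not_null`, `accepted_values` and `relationships`, which you normally declare against a model's columns in a YAML properties file. Each one compiles to a query that selects the failing rows, and the test passes when that query returns nothing. The Python sketch below imitates that pattern against SQLite so it can run anywhere; the helper functions and the sample `orders` and `customers` tables are hypothetical stand-ins, not dbt's own API.

```python
import sqlite3

# Each helper returns the *failing* rows; an empty list means the test passes,
# mirroring how dbt evaluates its generic tests. Names are interpolated directly,
# so they must come from trusted configuration.


def unique(conn, model, column):
    return conn.execute(
        f'SELECT "{column}", COUNT(*) FROM "{model}" WHERE "{column}" IS NOT NULL '
        f'GROUP BY "{column}" HAVING COUNT(*) > 1'
    ).fetchall()


def not_null(conn, model, column):
    return conn.execute(f'SELECT * FROM "{model}" WHERE "{column}" IS NULL').fetchall()


def accepted_values(conn, model, column, values):
    placeholders = ", ".join("?" for _ in values)
    return conn.execute(
        f'SELECT * FROM "{model}" WHERE "{column}" NOT IN ({placeholders})', list(values)
    ).fetchall()


def relationships(conn, model, column, to_model, to_column):
    # Child keys with no matching parent row (NULL child keys are ignored).
    return conn.execute(
        f'SELECT child."{column}" FROM "{model}" AS child '
        f'LEFT JOIN "{to_model}" AS parent ON child."{column}" = parent."{to_column}" '
        f'WHERE child."{column}" IS NOT NULL AND parent."{to_column}" IS NULL'
    ).fetchall()


def sample_db():
    """Hypothetical data with a duplicated order_id and an orphaned customer_id."""
    conn = sqlite3.connect(":memory:")
    conn.executescript(
        """
        CREATE TABLE customers (customer_id INTEGER, name TEXT);
        CREATE TABLE orders (order_id INTEGER, customer_id INTEGER, status TEXT);
        INSERT INTO customers VALUES (1, 'Ada'), (2, 'Grace');
        INSERT INTO orders VALUES (10, 1, 'placed'), (11, 2, 'shipped'), (11, 3, 'returned');
        """
    )
    return conn


if __name__ == "__main__":
    conn = sample_db()
    checks = {
        "unique(orders.order_id)": unique(conn, "orders", "order_id"),
        "not_null(orders.customer_id)": not_null(conn, "orders", "customer_id"),
        "accepted_values(orders.status)": accepted_values(
            conn, "orders", "status", ["placed", "shipped", "returned"]
        ),
        "relationships(orders.customer_id -> customers.customer_id)": relationships(
            conn, "orders", "customer_id", "customers", "customer_id"
        ),
    }
    for name, failures in checks.items():
        print("PASS" if not failures else f"FAIL ({len(failures)} rows)", name)
```

With this sample data, `unique` and `relationships` report failures while `not_null` and `accepted_values` pass.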

